In the past few years, volunteers have produced geographic information of many kinds, using a variety of crowdsourcing platforms in a broad range of contexts. However, there is still a lack of clarity about the specific types of tasks that volunteers can perform to derive geographic information from remotely sensed imagery, and about how the quality of the resulting information can be assessed for each task type. To fill this gap, we analyse the existing literature and propose a typology of tasks in geographic information crowdsourcing, which distinguishes between classification, digitisation and conflation tasks. We then present a case study related to the “Missing Maps” project, aimed at crowdsourced classification to support humanitarian aid.

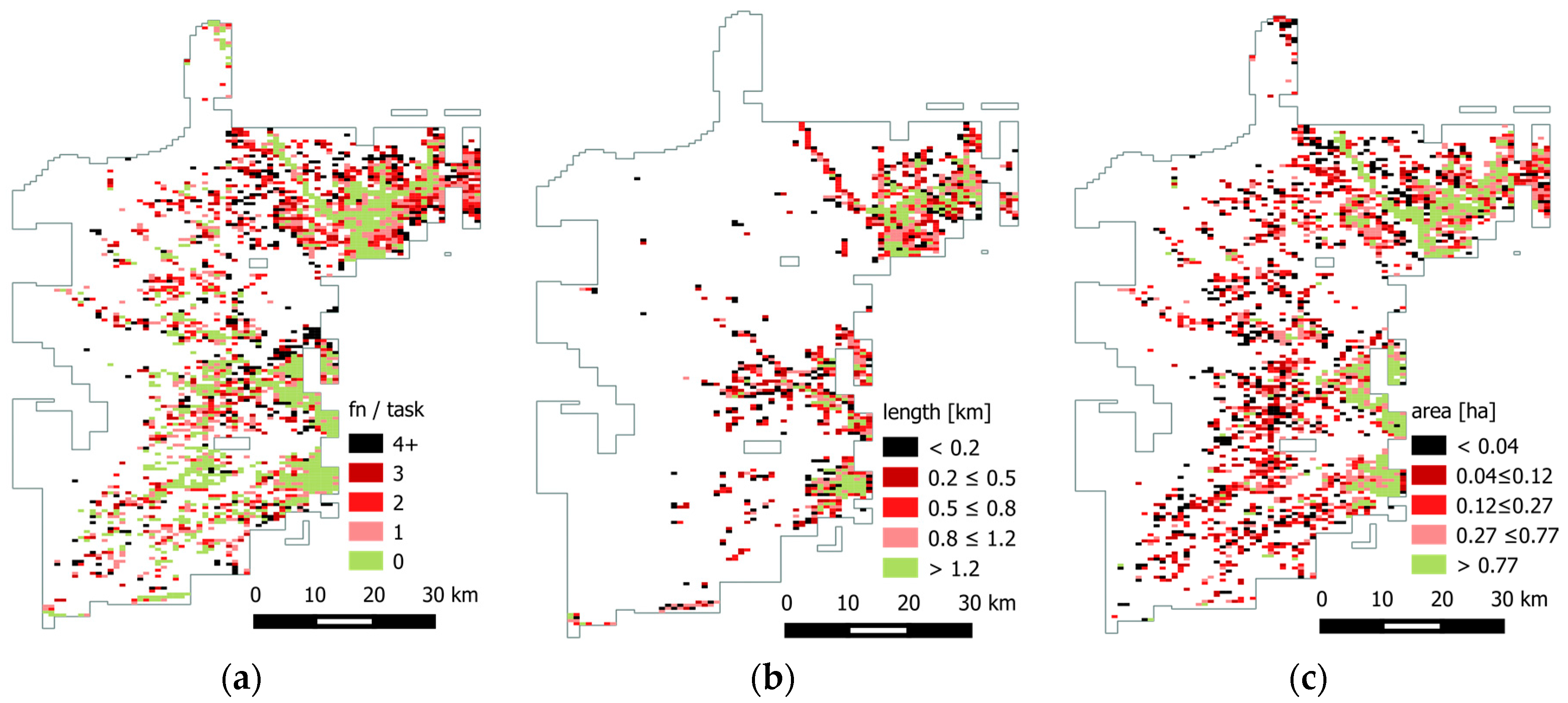

We use our typology to distinguish between the different types of crowdsourced tasks in the project, and we select the classification tasks that involve identifying roads and settlements in order to evaluate the crowdsourced classification. This evaluation shows that the volunteers achieved a satisfactory overall performance (accuracy: 89%; sensitivity: 73%; and precision: 89%). We also analyse different factors that could influence performance, and conclude that volunteers were more likely to misclassify tasks containing small objects. Furthermore, agreement among volunteers proved to be a very good predictor of the reliability of crowdsourced classification: tasks with the highest agreement level were 41 times more likely to be correctly classified by volunteers. The results thus show that crowdsourced classification of remotely sensed imagery can generate high-quality geographic information about human settlements. This study also clarifies the different levels of sophistication of the tasks that volunteers can perform, and reveals some factors that may affect their performance.
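For readers less familiar with the three reported measures, the following minimal Python sketch shows how accuracy, sensitivity and precision are conventionally computed from the counts of a binary confusion matrix, along with one simple way to express agreement among volunteers on a single task. It is not the code used in the study: the class and function names are hypothetical, the example counts are invented purely for illustration, and the majority-share agreement measure is an assumption rather than the paper's definition.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ConfusionCounts:
    """Counts of a binary classification compared against reference data."""
    tp: int  # tasks correctly marked as containing the feature
    fp: int  # tasks wrongly marked as containing the feature
    tn: int  # tasks correctly marked as empty
    fn: int  # tasks wrongly marked as empty


def accuracy(c: ConfusionCounts) -> float:
    """Share of all tasks that were classified correctly."""
    return (c.tp + c.tn) / (c.tp + c.fp + c.tn + c.fn)


def sensitivity(c: ConfusionCounts) -> float:
    """Share of tasks that truly contain the feature and were found."""
    return c.tp / (c.tp + c.fn)


def precision(c: ConfusionCounts) -> float:
    """Share of tasks marked as containing the feature that truly do."""
    return c.tp / (c.tp + c.fp)


def majority_agreement(votes: Sequence[bool]) -> float:
    """Share of volunteers who gave the majority answer for one task.

    Illustrative measure only; the paper may define agreement differently.
    """
    if not votes:
        raise ValueError("at least one vote is required")
    yes = sum(votes)
    return max(yes, len(votes) - yes) / len(votes)


# Invented counts for illustration; these are NOT the study's data.
example = ConfusionCounts(tp=8, fp=2, tn=85, fn=5)
print(accuracy(example), sensitivity(example), precision(example))
print(majority_agreement([True, True, True, False]))
```

Sensitivity and precision are reported separately because they penalise different errors: a low sensitivity means settlements or roads were missed, whereas a low precision means empty tasks were flagged by mistake.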

http://www.geog.uni-heidelberg.de/gis/publikationen_journals_en.html

Porto de Albuquerque, J., B. Herfort, M. Eckle (2016): The Tasks of the Crowd: A Typology of Tasks in Geographic Information Crowdsourcing and a Case Study in Humanitarian Mapping. Remote Sensing. 2016, 8(10), 859; doi:10.3390/rs8100859.