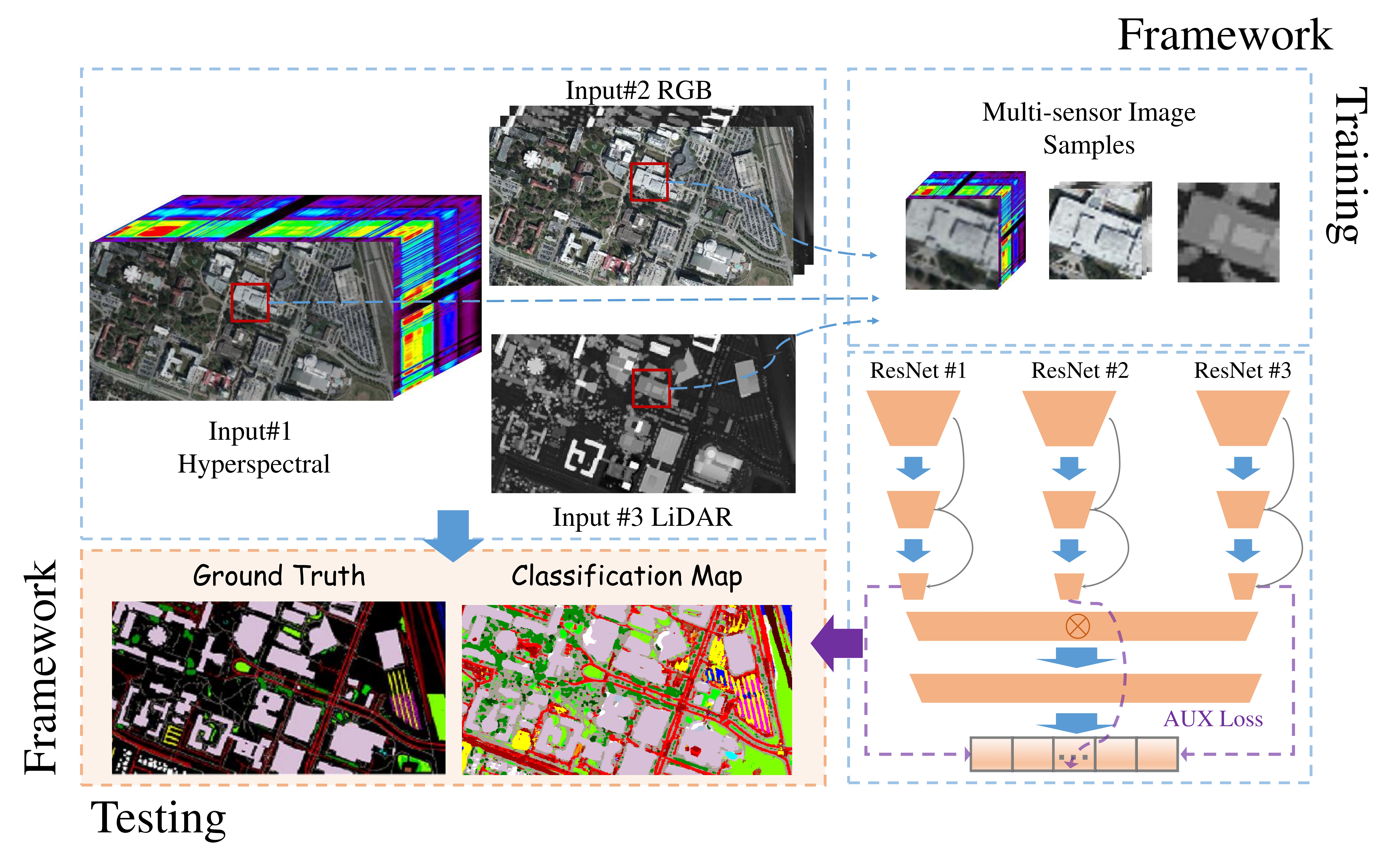

Deep learning networks for feature extraction and classification have considerably improved multi-sensor remote sensing image classification. In our recent paper, we propose a novel multi-sensor fusion framework (CResNet-AUX) for fusing diverse remote sensing data sources. Its novelty rests on three design innovations:

- An adaptation of coupled residual networks to multi-sensor data classification;

- An auxiliary training scheme that adjusts the loss function to handle classification with limited training samples;

- A unique design of the residual blocks to reduce the computational complexity while preserving the discriminative characteristics of multi-sensor features.
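To make the auxiliary training idea concrete, here is a minimal sketch of a loss that adds per-sensor auxiliary terms to the loss of the fused prediction. It is an illustration under stated assumptions, not the paper's exact formulation: the function names, the use of softmax cross-entropy for every head, and the single `aux_weight` hyperparameter are all hypothetical choices made for this example.

```python
import math


def softmax_cross_entropy(logits, label):
    """Cross-entropy of one sample, given raw class scores and the true class index."""
    # Subtract the maximum score before exponentiating for numerical stability.
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


def combined_loss(main_logits, aux_logits_per_sensor, label, aux_weight=0.5):
    """Main (fused) loss plus a weighted sum of auxiliary per-sensor losses.

    Assumption: each sensor branch has its own auxiliary classifier head,
    and `aux_weight` is a hypothetical hyperparameter (not from the paper).
    """
    main = softmax_cross_entropy(main_logits, label)
    aux = sum(softmax_cross_entropy(logits, label) for logits in aux_logits_per_sensor)
    return main + aux_weight * aux


# Example: a 3-class problem with two sensor branches (values are made up).
loss = combined_loss(
    main_logits=[2.0, 0.5, -1.0],
    aux_logits_per_sensor=[[1.5, 0.2, -0.3], [0.8, 0.9, -0.5]],
    label=0,
)
```

The intuition behind such a design is that the auxiliary terms give each sensor branch its own supervision signal, which may help when few labelled samples are available; see the paper for the loss actually used.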

The proposed classification framework is evaluated on three remote sensing datasets: two urban University of Houston datasets (Houston 2013 and the training portion of Houston 2018) and the rural Trento dataset. It achieves overall accuracies of 93.57%, 81.20%, and 98.81% on Houston 2013, the training portion of Houston 2018, and Trento, respectively. The experimental results also demonstrate considerable improvements in classification accuracy over existing state-of-the-art methods.
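For readers unfamiliar with the metric, overall accuracy is the fraction of test samples whose predicted class matches the reference label. The short sketch below shows that standard definition; the function name and the toy labels are illustrative and are not taken from the paper's code or data.

```python
def overall_accuracy(y_true, y_pred):
    """Fraction of samples whose predicted class equals the reference class."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("at least one sample is required")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


# Toy example: three of the four predictions match the reference labels.
oa = overall_accuracy([0, 1, 2, 2], [0, 1, 1, 2])
```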

More importantly, the proposed CResNet-AUX is designed to be a fully automatic, generalized multi-sensor fusion framework whose network architecture is largely independent of the input data types and is not limited to specific sensor systems. Our framework is applicable to a wide range of multi-sensor datasets in an end-to-end, wall-to-wall manner.

Future work on intelligent and robust multi-sensor fusion methods may benefit from the insights in this paper. In further research, we plan to test the performance of our framework on large-scale applications (e.g., continental and/or planetary land use and land cover classification) and to include additional types of remote sensing data. More details can be found in the paper:

Li, H.; Ghamisi, P.; Rasti, B.; Wu, Z.; Shapiro, A.; Schultz, M.; Zipf, A. (2020) A Multi-Sensor Fusion Framework Based on Coupled Residual Convolutional Neural Networks. Remote Sensing. 12, 2067. https://doi.org/10.3390/rs12122067